LLMOps Industry-Ready Projects

₹6500.00

(inclusive of GST)

Course Overview

5 Months

Start Date

July 12, 2025

Saturday & Sunday, 2 to 5 PM

Master LLMOps to Ace Your AI Career

- From Basics to Mastery: Understand the full LLMOps pipeline, from foundational NLP to cutting-edge generative AI.

- Hands-On Projects: Deploy RAG pipelines, fine-tune open-source LLMs, integrate vector databases, and build LangChain-based agent workflows.

- Production-Ready Focus: Learn how to dockerize, monitor, and scale LLM apps with CI/CD, testing, logging, and prompt evaluation.

- Multimodal AI: Go beyond text and work with image, voice, and PDF inputs using models like Gemini, GPT-4V, and LLaVA.

- Tool Stack You’ll Master: LangChain, LlamaIndex, Hugging Face, ChromaDB, Weaviate, FastAPI, Docker, GitHub Actions, and more.

- Build Your Own AI Agent: In the final project, you develop, deploy, and scale your own autonomous LLM-powered app.

- Mentorship & Support: Get guided by experts with 1-on-1 mentorship, code reviews, and real-time doubt resolution.

What You Will Learn

LLMs in Action

Learn prompt engineering, embeddings, vector search, fine-tuning, and how to use APIs like GPT, Claude, and Gemini.

Retrieval-Augmented Generation (RAG)

Build production-ready RAG pipelines using LangChain, LlamaIndex, and vector databases.

Fine-Tuning & LLMOps

Fine-tune open-source models and deploy them with proper CI/CD, dockerization, and performance monitoring.

Agents & Tool Use

Create autonomous agents that can search, code, summarize, and use external tools via LangChain and Hugging Face.

Multimodal GenAI

Work with voice, images, and PDFs using GPT-4V, LLaVA, and other vision-language models.

Production & Scaling

Deploy robust LLM apps with FastAPI, Docker, and GitHub Actions—ready for real-world traffic.

Projects You'll Build

GenAI PDF Assistant

Build a chat assistant that reads and understands complex PDF documents using LLMs + embeddings.

Google Search Agent

Create an autonomous agent that can perform real-time Google searches and summarize the results using LLMs.

Voice-Based Chatbot

Build a multimodal assistant that listens to your voice, processes queries, and speaks back with real-time answers.

LangChain-Powered RAG App

Develop a complete Retrieval-Augmented Generation app using LangChain, vector stores, and OpenAI APIs.

End-to-End AI Chat App

Design and deploy a full-stack chat interface (with FastAPI + Streamlit) integrating real-time LLM responses.

Custom Fine-Tuned LLM

Fine-tune an open-source LLM on your own dataset and deploy it with Docker and GitHub Actions.

Multimodal Q&A with Vision Models

Use models like GPT-4V and LLaVA to answer questions based on image inputs—great for product demos or education.

AI Agent that Codes

Build an AI agent that can read problem statements, write Python code, and even debug itself using tools.

End-to-End LLMOps Pipeline

Take any LLM project to production with logging, monitoring, CI/CD, and performance checks.

Course Curriculum

Complete Project Setup for AI Development

1. Installing Anaconda / Miniconda

2. Python Virtual Environment Setup: Best Practices for AI Projects

3. Managing Dependencies with pip, uv, requirements.txt

4. IDE Setup: VSCode, Jupyter

5. Standard AI/ML Project Folder Structure (src, notebooks, configs, utils)

6. Modular Codebase Design (functions, classes, packages)

7. Configuration Management using YAML, JSON, dotenv

8. Best Practices: Notebooks vs Scripts

9. Git & GitHub Setup for AI Projects

10. Docker Setup for Containerization

11. Branching Strategies (feature, dev, main)

12. Commit Message Conventions with commitizen / pre-commit

13. Collaborating with Teams using GitHub Projects

14. Importance of Logging in AI Projects

15. Using Python’s logging module

16. Log Levels: DEBUG, INFO, WARNING, ERROR, CRITICAL

17. Writing Logs to File, Console, and Rotating Logs

18. Understanding Built-in & Custom Exceptions in Python

19. try-except-finally Blocks: Robust Usage

20. Creating Custom Exception Classes

21. Centralized Exception Handling Strategy
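To give a flavor of the logging and exception-handling topics that close this module, here is a minimal sketch using only Python's standard library. It is an illustration, not course material: the names `PipelineError`, `build_logger`, and `run_stage`, the log format, and the rotation limits are all assumptions chosen for the example.

```python
import logging
import sys
from logging.handlers import RotatingFileHandler


class PipelineError(Exception):
    """Hypothetical custom exception that records which stage failed."""

    def __init__(self, stage: str, message: str) -> None:
        super().__init__(f"[{stage}] {message}")
        self.stage = stage


def build_logger(name: str, log_path: str) -> logging.Logger:
    """Return a logger that writes to the console and to a rotating file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    if logger.handlers:  # already configured; avoid duplicate handlers
        return logger

    fmt = logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")

    console = logging.StreamHandler(sys.stdout)
    console.setLevel(logging.INFO)  # console shows INFO and above
    console.setFormatter(fmt)

    # Assumed limits: rotate at roughly 1 MB and keep 3 old files.
    rotating = RotatingFileHandler(log_path, maxBytes=1_000_000, backupCount=3)
    rotating.setLevel(logging.DEBUG)  # file keeps everything
    rotating.setFormatter(fmt)

    logger.addHandler(console)
    logger.addHandler(rotating)
    return logger


def run_stage(logger: logging.Logger, stage: str, func):
    """Centralized handling: run func, log the outcome, wrap unexpected errors."""
    try:
        result = func()
        logger.info("stage %s finished", stage)
        return result
    except PipelineError:
        logger.error("stage %s raised a pipeline error", stage)
        raise
    except Exception as exc:
        # logger.exception logs at ERROR level and includes the traceback.
        logger.exception("stage %s failed", stage)
        raise PipelineError(stage, str(exc)) from exc
    finally:
        logger.debug("stage %s exited", stage)
```

A caller might build the logger once with `build_logger("app", "app.log")` and route each step through `run_stage`, so that every failure surfaces as a `PipelineError` with the original exception preserved as its cause.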

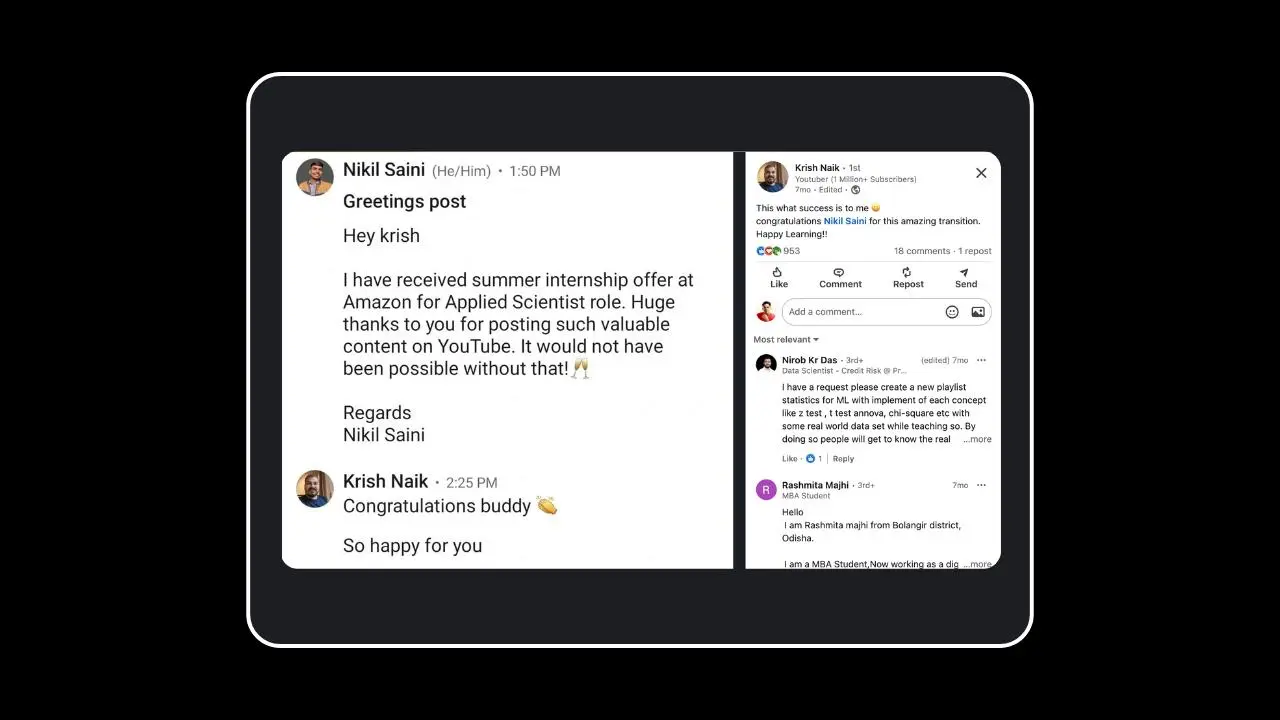

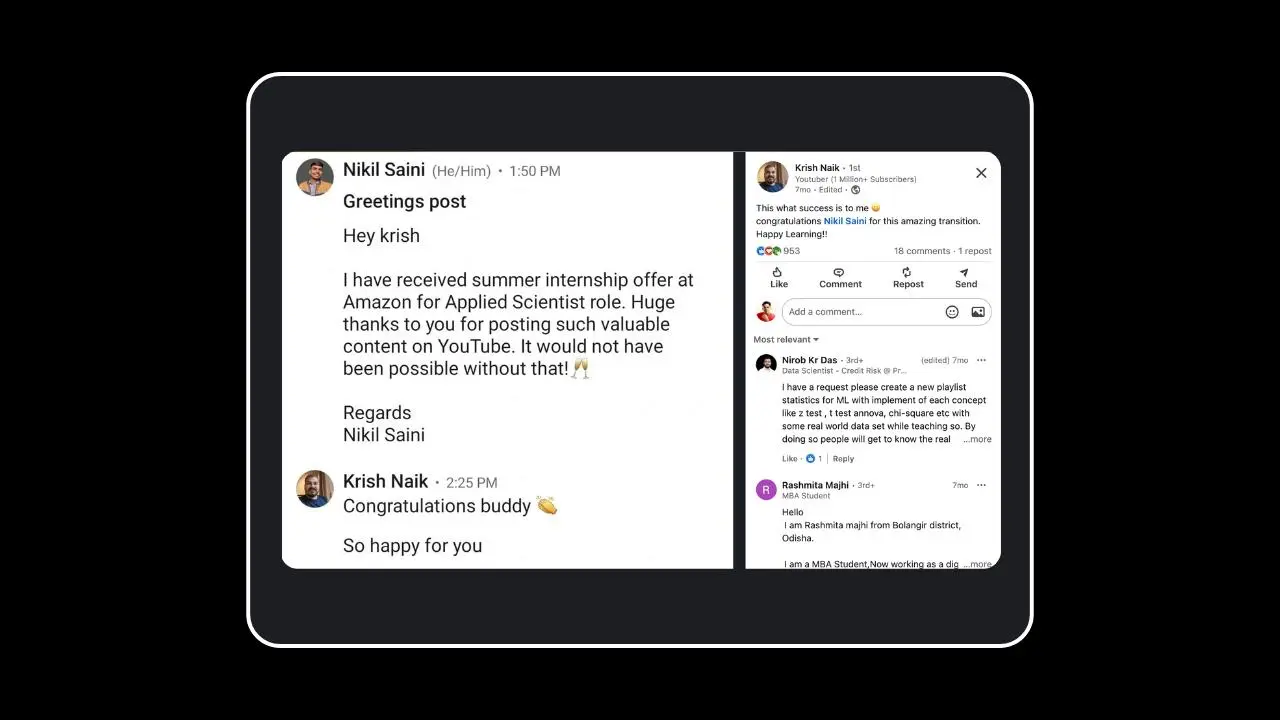

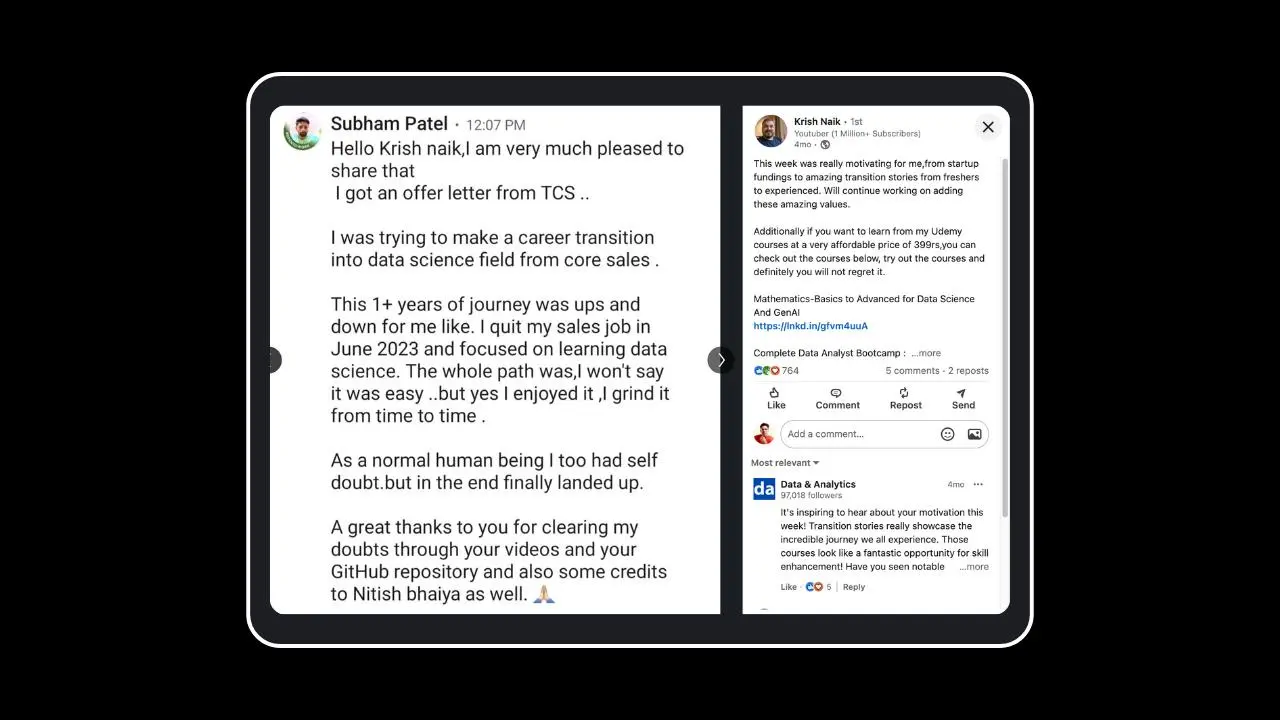

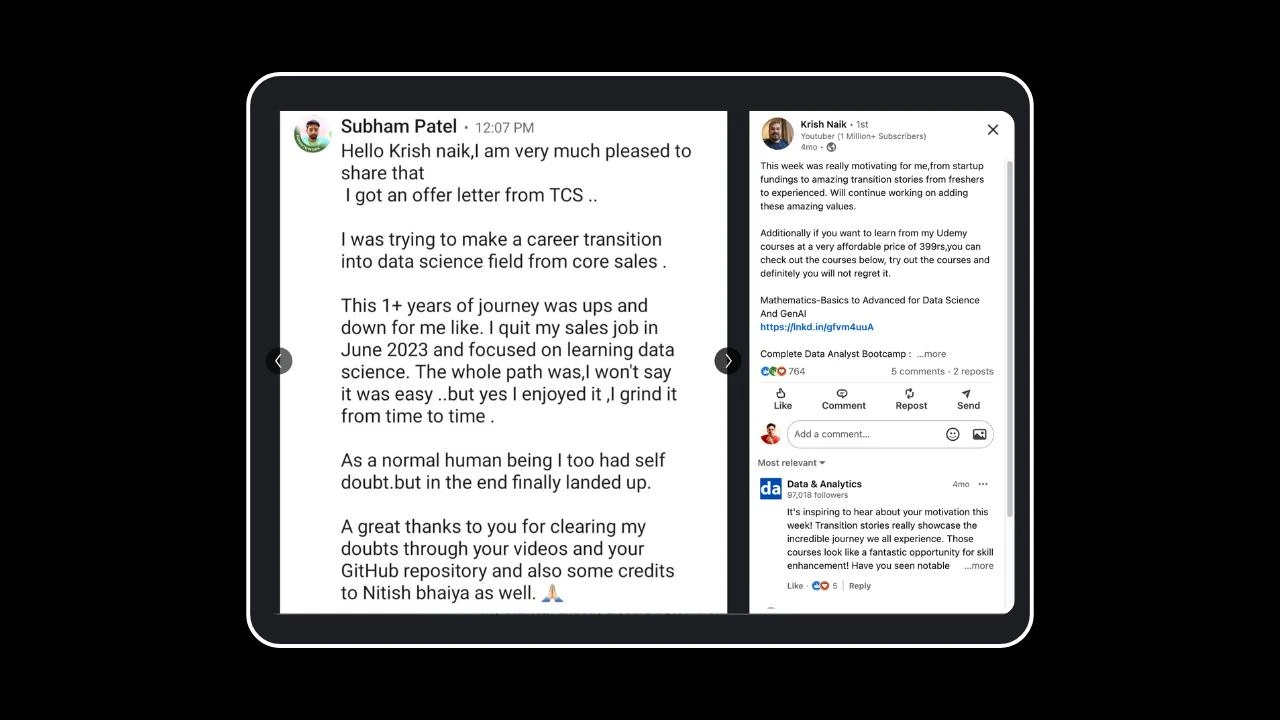

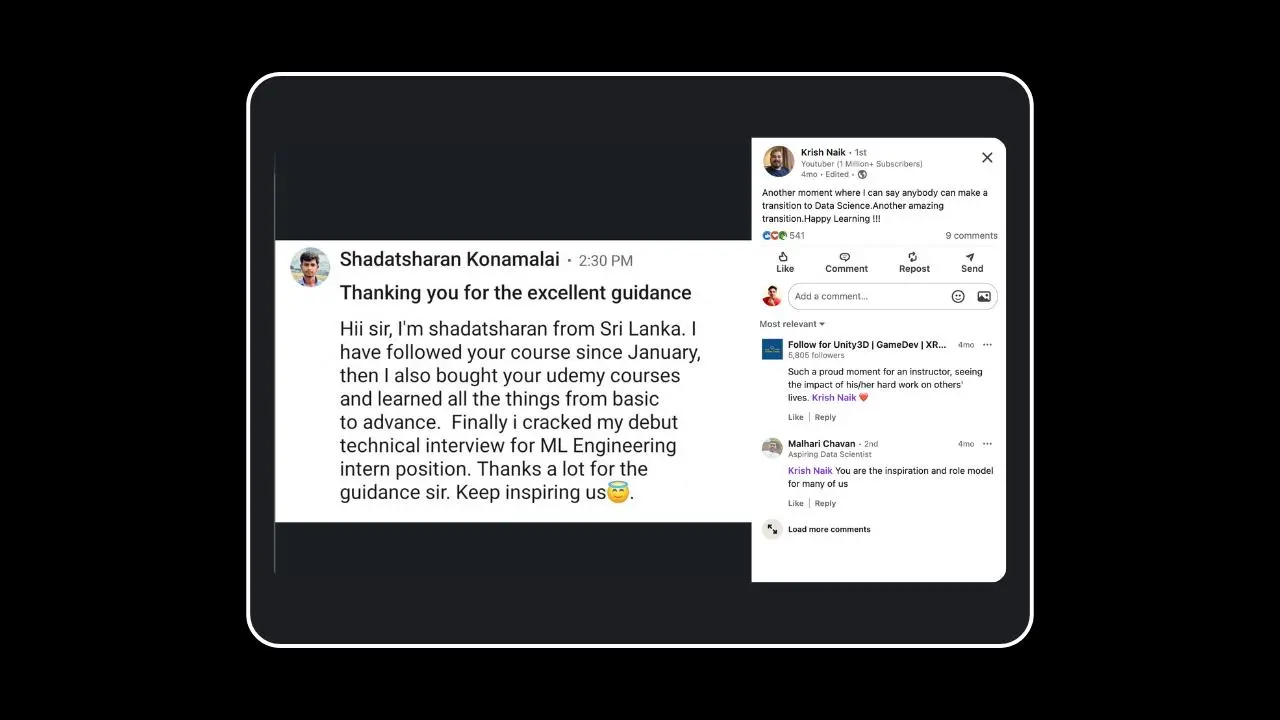

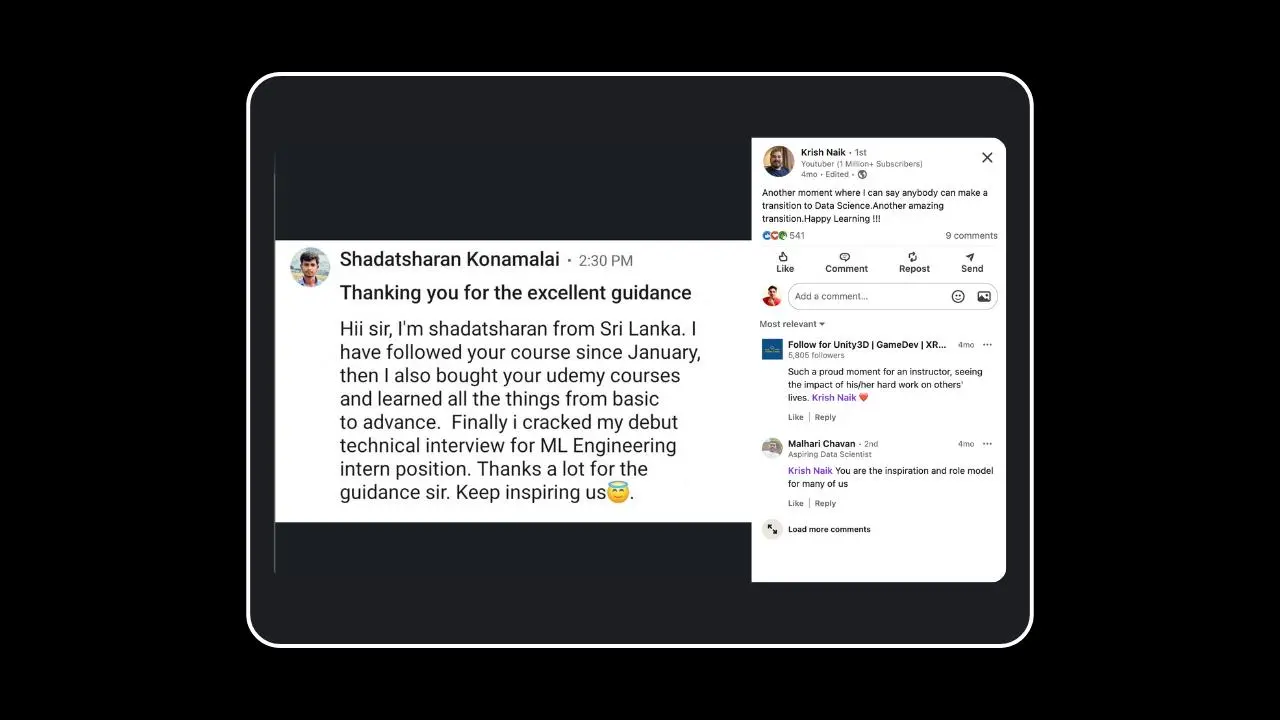

Learn from Industry Experts

Flexible Learning For Everyone

Flexible Online Learning Format

Learn at your own pace and on your own schedule with access to both live and recorded sessions. Our online LLMOps course is designed to make learning convenient for busy professionals and students.

Learn from GenAI & LLM Experts

Get mentored by professionals who’ve built real-world Generative AI applications using LLMs, LangChain, and Hugging Face. Expect battle-tested insights, not just theory.

Cutting-Edge Generative AI Curriculum

Stay ahead with a curriculum designed around the latest advancements in Generative AI, LLMs, LangChain, vector databases, and real-world agentic AI applications. The content is updated frequently to match the pace of innovation.

Career Support for Generative AI Professionals

Get tailored career guidance, including resume building, mock interviews, and job referrals, all focused on helping you break into top roles in Generative AI, LLM engineering, and applied AI development.

Premium Community Access

Network and collaborate with like-minded professionals, and get doubt resolution from experts and mentors.